Lights, camera, action!

When Framestore retired the Quantel Paintbox and the Harry in the early '90s, their replacement, SGI's imposing Onyx system, had become the industry standard. Onyx visualisation systems, which were the size of refrigerators, were capable of supporting up to 64 MIPS R4400 microprocessors running at speeds up to 200MHz and could run up to three streams of high-resolution 3D graphics using their unique "RealityEngine2" graphics subsystem. The Onyx was an extremely powerful machine, particularly when compared to consumer PCs; a typical PC at the time would have been powered by an Intel 486 chip running at just 33MHz and would have had, if you were lucky, a whole 8MB of RAM.

The price for such power was high: an Onyx system running Discreet Logic's (now Autodesk's) "Flame" image compositing software could cost hundreds of thousands of pounds, with company deployments running into the millions.

"The early days were dominated by the first-wave workstation companies, almost exclusively SGI," says Framestore's CTO Steve MacPherson. "These systems were beautifully engineered and presented truly unique capabilities to the early adopters. Dynasties were built on the ability to mortgage the house and purchase an Onyx running Flame, including Framestore, MPC, and The Mill. This capacity and capability are now routinely available and can run quite effectively on a well-configured laptop."

Indeed, as Moore's law continued to hold throughout the late '90s and early '00s, companies like Framestore began to move away from pricey workstations towards far cheaper desktop machines that made use, in part, of regular PC components. SGI, once on the cutting edge of computing and valued at $45 a share in 1995, found itself being delisted from the New York Stock Exchange in 2005 when its shares dropped to a miserly 46 cents apiece.

Today, VFX studios are powered by Intel processors, Nvidia graphics cards (all the VFX studios I spoke to used Nvidia graphics cards, citing reasons of driver stability), and Linux operating systems. These parts are just as accessible to consumers as they are to businesses: one only need look at the rise of YouTube and high-quality homemade videos to see just how far the commoditisation of VFX technology has come.

But for all the digital wizardry that studios like Framestore and MPC employ, much of their work is grounded in traditional filmmaking techniques. CGI is about building sets, lighting them, filling them with actors, and recording the light that bounces back off them through the lens of a camera. That it all exists as a series of 1s and 0s merely allows for greater flexibility in the telling of a story, and filmmakers know it. Twenty years ago, VFX might have made up 10 percent of a film's total budget. Today, it can be as much as 40 or 60 percent.

"Directors often call us before the film is even settled, to try and talk about ideas," says MPC's Fagnou. "In Godzilla for example, Director Gareth Edwards was here before he even spoke to Warner Brothers. Gareth really wanted to make the film but wasn't quite sure how to go about it or sell it to the big studios, so we did some tests for them. He really liked it and got the film approved, and then we did a large chunk of the film for him.

"When you hear the story about Avatar, it was that James Cameron had this really great idea, and he pitched it to people and they all told him that it wasn't possible. But at some point, VFX made it possible, and he told a great story. I consider us great enablers of the filmmakers."

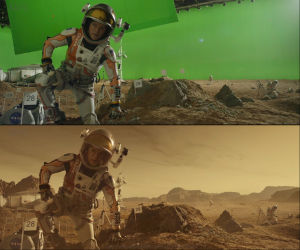

Although VFX is plastered with the "post-production" label, on a typical film (a mix of live action and CGI) VFX studios often send visual effects supervisors to work with directors before shooting begins. Once filming does begin, the studio is given information about builds, that is, scenes that require VFX work, allowing it to plan whether a CGI creature needs to be built or an entire set needs to be replaced. The latter is surprisingly common, according to Framestore's Sargent, even in films that aren't typically shot against a green screen. After all, why bother shutting down an entire street and editing out wires and cameras when you can just recreate it digitally and shoot at any angle you like?

A build phase follows, in which the environments are modelled and digital doubles for stunts, or digital actors, are made. Wireframes are created onto which textures are applied, and rich surfaces like hair and cloth are added. All the while, the director edits the film, making cuts to various scenes before producing a "turnover," a sequence of film that's ready to be shared with the VFX studio. This in turn is used to make a "plate," a collection of images from the film against which the studio can place a virtual 3D camera in the same position as the real camera. With perspectives maintained, virtual set assets can be merged with film footage, creating a layout on which other artists, particularly character artists, can continue to build.

Environments are added in, either as matte paintings or as 3D models, and animation is finalised. The last step, but by far the most important, is lighting. A lighting team will take each element of a scene—the camera, the set, the geometry that has been laid out, the animated characters—and begin placing lights, either to match the lighting already in place from the real-world footage, or to create new effects entirely.

It is an extremely time-intensive process. Creative considerations aside, even the most powerful workstations are unable to simulate the complexity of lighting in real time. Imagine for a moment a simple scene: an empty, square-shaped room with white walls, in the middle of which sits a shiny red ball. Shining just a single light on that ball and accurately simulating those rays of light requires millions of calculations. How strong is the light source, and what colour temperature is the light? Which direction is the light coming from and what kinds of shadows does the ball cast as a result? How reflective is the surface of the ball? Does the ball change the colour temperature of the light that bounces off it? Do the walls reflect any light? If they do, how does that affect where each light ray travels? Does it have any impact on the shadows being created? The list goes on.

These complex calculations are handled by ray-tracing algorithms, which track rays of light from a light source to an object, and then calculate how many times each ray is absorbed, reflected, or refracted by various surfaces. Each of these interactions, dubbed a "bounce", adds to the workload, and together they can strain a processor to breaking point. Limiting the number of bounces helps (a technique that's been used in recent video games like The Tomorrow Children to create more realistic real-time lighting), but for the high-fidelity rendering required in films, each frame is sent to a render farm that could contain tens of thousands of CPU cores, all pooling their collective processing power. Even then, it can take several hours to render just a single frame.
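To make the idea of a bounce limit concrete, here is a minimal sketch of a recursive ray tracer in Python. It is an illustration only, not how any studio's renderer works. The scene (a shiny red ball resting above a huge white sphere standing in for the floor, plus one point light), the names `SPHERES`, `LIGHT`, and `trace`, and the simple mirror-plus-diffuse shading are all assumptions made for the example. For simplicity it follows rays outward from the camera rather than from the light.

```python
import math

def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

# Hypothetical scene: (centre, radius, colour, reflectivity)
SPHERES = [
    ((0.0, 0.0, 5.0), 1.0, (1.0, 0.0, 0.0), 0.5),         # shiny red ball
    ((0.0, -1001.0, 5.0), 1000.0, (1.0, 1.0, 1.0), 0.0),  # matte white "floor"
]
LIGHT = (5.0, 5.0, 0.0)  # a single point light (assumed position)

def hit_sphere(origin, direction, centre, radius):
    """Distance along a unit-length ray to the sphere's near surface, or None."""
    oc = sub(origin, centre)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0.0:
        return None
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 1e-4 else None  # ignore hits behind or at the origin

def nearest_hit(origin, direction):
    best = None
    for centre, radius, colour, reflectivity in SPHERES:
        t = hit_sphere(origin, direction, centre, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, centre, colour, reflectivity)
    return best

def trace(origin, direction, bounces_left):
    """Colour seen along a ray, following at most `bounces_left` reflections."""
    hit = nearest_hit(origin, direction)
    if hit is None:
        return (0.0, 0.0, 0.0)  # ray left the scene: black background
    t, centre, colour, reflectivity = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, centre))
    offset = sub(LIGHT, point)
    to_light = normalize(offset)
    # Shadow test: is anything between this point and the light?
    blocker = nearest_hit(point, to_light)
    in_shadow = blocker is not None and blocker[0] < math.sqrt(dot(offset, offset))
    diffuse = 0.0 if in_shadow else max(0.0, dot(normal, to_light))
    local = scale(colour, diffuse)
    if bounces_left == 0 or reflectivity == 0.0:
        return local  # bounce budget spent, or a matte surface
    reflected = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
    bounced = trace(point, reflected, bounces_left - 1)
    return add(scale(local, 1.0 - reflectivity), scale(bounced, reflectivity))

camera = (0.0, 0.0, 0.0)
print(trace(camera, (0.0, 0.0, 1.0), bounces_left=0))
print(trace(camera, (0.0, 0.0, 1.0), bounces_left=1))
```

Every extra bounce allowed means at least one more intersection search and one more shadow test per ray, repeated for every pixel of every frame, which is why capping `bounces_left` is such an effective way to trade accuracy for speed.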

Just a tiny increase in processing power can dramatically reduce the rendering time, and with certain Hollywood studios now demanding turnaround times of as little as six months (according to MPC), every second helps. This thirst for speed has led to VFX studios like New Zealand-based Weta Digital assembling render farms on a vast scale. For Avatar, Weta put together 34 racks of computers, with each rack containing four chassis of 32 machines each. In total, it used a staggering 40,000 processor cores along with 104 terabytes of memory to produce the stunning 3D visuals of the film.
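As a rough sanity check, the Weta figures quoted above can be multiplied out in a few lines of Python. This is back-of-the-envelope arithmetic rather than a description of the real hardware: the variable names are made up for the example, and it assumes decimal units (1TB = 1,000GB).

```python
RACKS = 34
UNITS_PER_RACK = 4 * 32    # four groups of 32 per rack, as quoted
TOTAL_CORES = 40_000
TOTAL_MEMORY_TB = 104

units = RACKS * UNITS_PER_RACK                              # 4,352 units in total
cores_per_unit = TOTAL_CORES / units                        # a little over 9
memory_per_core_gb = TOTAL_MEMORY_TB * 1000 / TOTAL_CORES   # 2.6GB per core

print(units, round(cores_per_unit, 1), memory_per_core_gb)
```

On those numbers, each of the 4,352 units would account for roughly nine of the 40,000 cores, with about 2.6GB of memory available per core.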

Framestore, which spreads its adiabatically-cooled render farms over various locations in central London, even has its own dark fibre network. It uses "coarse wavelength division multiplexing", or CWDM, a way of squeezing multiple signals down a single fibre optic cable, to deliver sub-millisecond latency and 10Gbps connection speeds.

High-end graphics cards, much like the ones in hyper-powered PCs that are used to play the latest video games, are increasingly finding a home in VFX studios too. The sheer number of simultaneous calculations required for ray tracing and global illumination, as well as in physics and fur modelling systems, makes these workloads a perfect fit for the parallel processing chops of the latest graphics cards. Workstations equipped with Nvidia Quadro M6000s, the professional version of the prosumer-focused Titan X, are being used to generate real-time previews of 3D scenes, forgoing the laborious process of sending a scene out to a render farm, or waiting for it to render locally on the CPU. But for all the benefits the GPU brings to individual artists toiling away on their workstations, the final high-resolution, cranked-to-11 images are the product of thousands of CPUs.
